The myth of digital

OR precision versus accuracy

During the first year of college I took a course on measurement. I remember lots of old Weston analog meters with no digital instruments in sight. We learned that precision is how finely a measurement is divided (its resolution), while accuracy is how close that measurement is to the correct value.

In my opinion these terms are often misused. For example, weapons are now often referred to as "precision guided". It seems to me what they really mean is high accuracy. Dropping a bomb into a pickle barrel, that kind of thing, is accuracy. High precision would be a bomb just large enough to destroy the pickle barrel and nothing else. A punch in the nose is high precision: only one person's nose is involved. I suppose what they are trying to say is that fewer bombs need to be dropped now (due to increased accuracy) than before to apply the required destructive force.

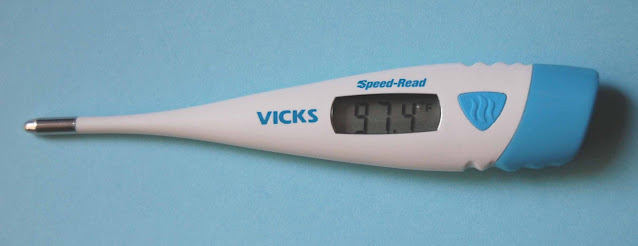

Anyhow, I wasn't feeling that great today and thought I might check my temperature. The digital thermometer I have is easy to read and quick, and has a precision of 0.1 degree Fahrenheit.

Caption: sub normal, that's me

I also have a mercury thermometer: analog, harder to read, and not so fast (I keep it under the tongue for 5 minutes), with 0.2 degree Fahrenheit tick marks. It also has to be "shook down" before use.

Caption: sub normal AGAIN!

So the question is: do I believe a mercury thermometer reading 98.2 F or a digital thermometer reading 97.4 F?

In this case it doesn't really matter that much: both are lower than the nominal 98.6 F, so I'm assuming I don't have a fever.
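For anyone who likes to see the arithmetic, here is a rough Python sketch of the comparison. It only uses the numbers from this post (the two readings, the 0.1 F and 0.2 F resolutions, and the nominal 98.6 F); the function and variable names are made up for illustration, and treating the coarser tick size as the yardstick is my own simplifying assumption, not a proper uncertainty analysis.

```python
from decimal import Decimal

# Nominal body temperature used in the post, in degrees Fahrenheit.
NOMINAL_F = Decimal("98.6")

# (reading, resolution) pairs in degrees Fahrenheit, taken from the post.
# Decimal avoids binary floating-point noise in the subtractions.
readings = {
    "digital": (Decimal("97.4"), Decimal("0.1")),
    "mercury": (Decimal("98.2"), Decimal("0.2")),
}


def compare(readings, nominal=NOMINAL_F):
    """Summarize how far apart the two readings are.

    Assumption (hypothetical yardstick): if the disagreement is larger
    than the coarser of the two resolutions, resolution alone cannot
    explain it, so at least one instrument is likely off in accuracy.
    """
    d_val, d_res = readings["digital"]
    m_val, m_res = readings["mercury"]
    disagreement = abs(m_val - d_val)
    coarsest = max(d_res, m_res)
    return {
        "disagreement": disagreement,
        "coarsest_resolution": coarsest,
        "explained_by_resolution": disagreement <= coarsest,
        "below_nominal": {
            name: nominal - value for name, (value, _) in readings.items()
        },
    }


summary = compare(readings)
print("Disagreement between thermometers:", summary["disagreement"], "F")
print("Coarsest resolution:", summary["coarsest_resolution"], "F")
print("Explained by resolution alone:", summary["explained_by_resolution"])
for name, delta in summary["below_nominal"].items():
    print(f"{name} reads {delta} F below nominal")
```

The two thermometers disagree by 0.8 F, several times the finest division on either one, so a finer display clearly doesn't guarantee a more correct reading. Which of the two is closer to the truth can't be settled from these numbers alone.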

I took an aspirin and felt better later. I almost broke out the Covid-19 test but decided not to use it.

BTW, the mercury thermometer is a little older than I am (66; no ageism, please!).

Best Regards,

Chuck, WB9KZY

http://wb9kzy.com/ham.htm